How to Create a PostgreSQL Delta Table in Google BigQuery

This is a legacy platform changelog. The information reflected here may not represent current functionality and some links may be broken.

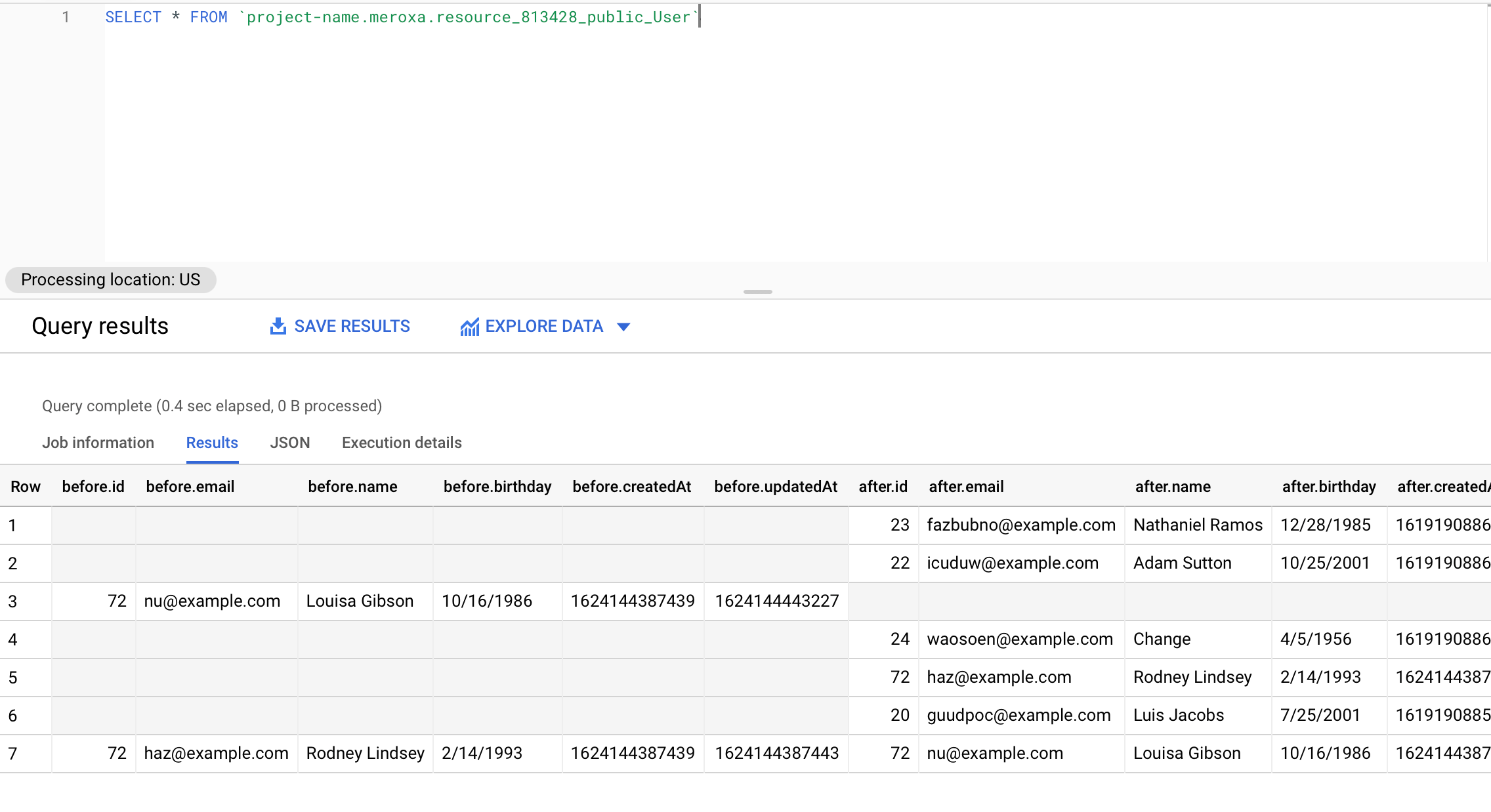

In this guide, we will create a real-time data pipeline to create a delta table in BigQuery of changes from a PostgreSQL database using Meroxa. This pipeline will create, store, and update a table within BigQuery that contains a row for every insert, update, and delete operation from PostgreSQL.

How it works?

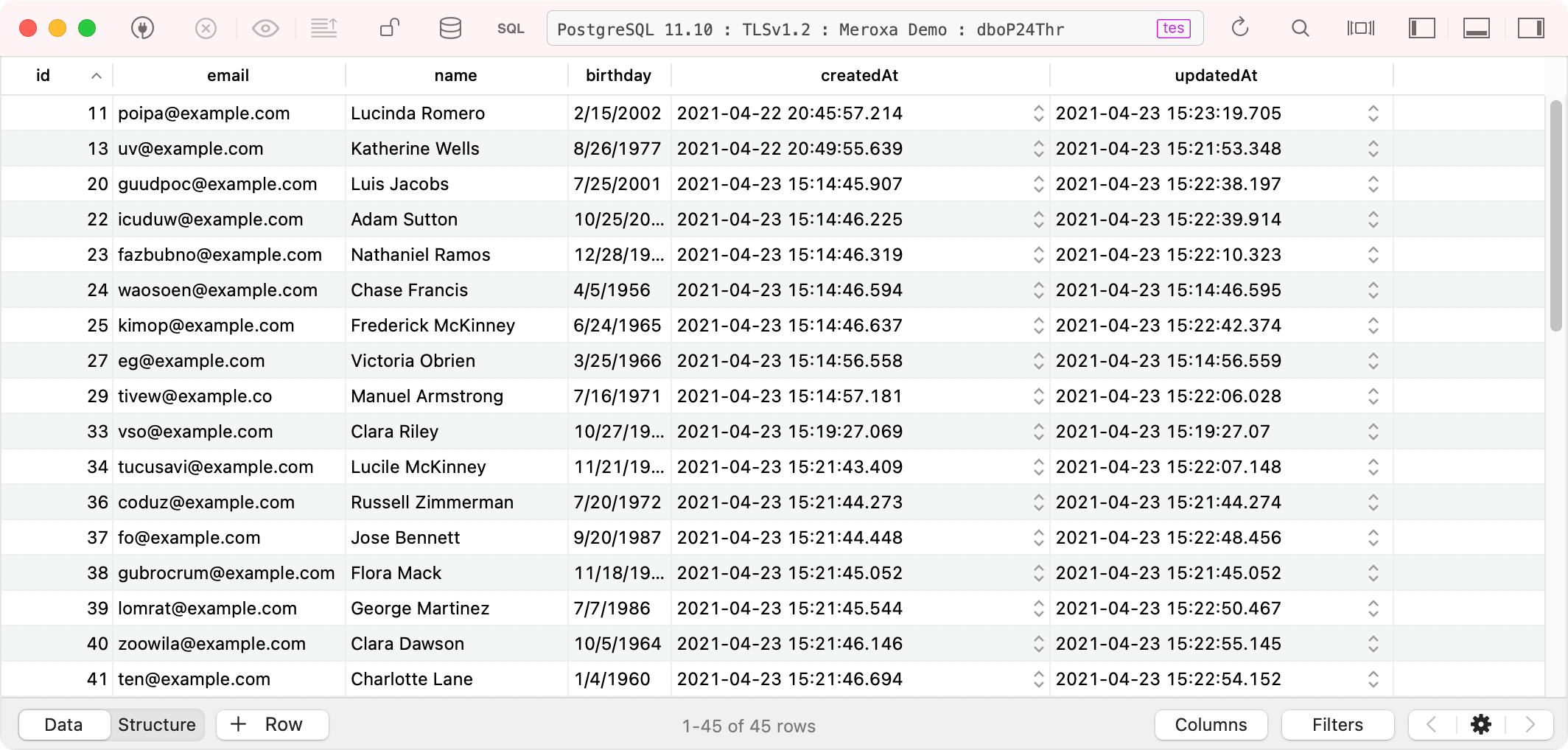

For this example, we have a PostgreSQL table called public.Users. This table includes data such as name, email, etc.

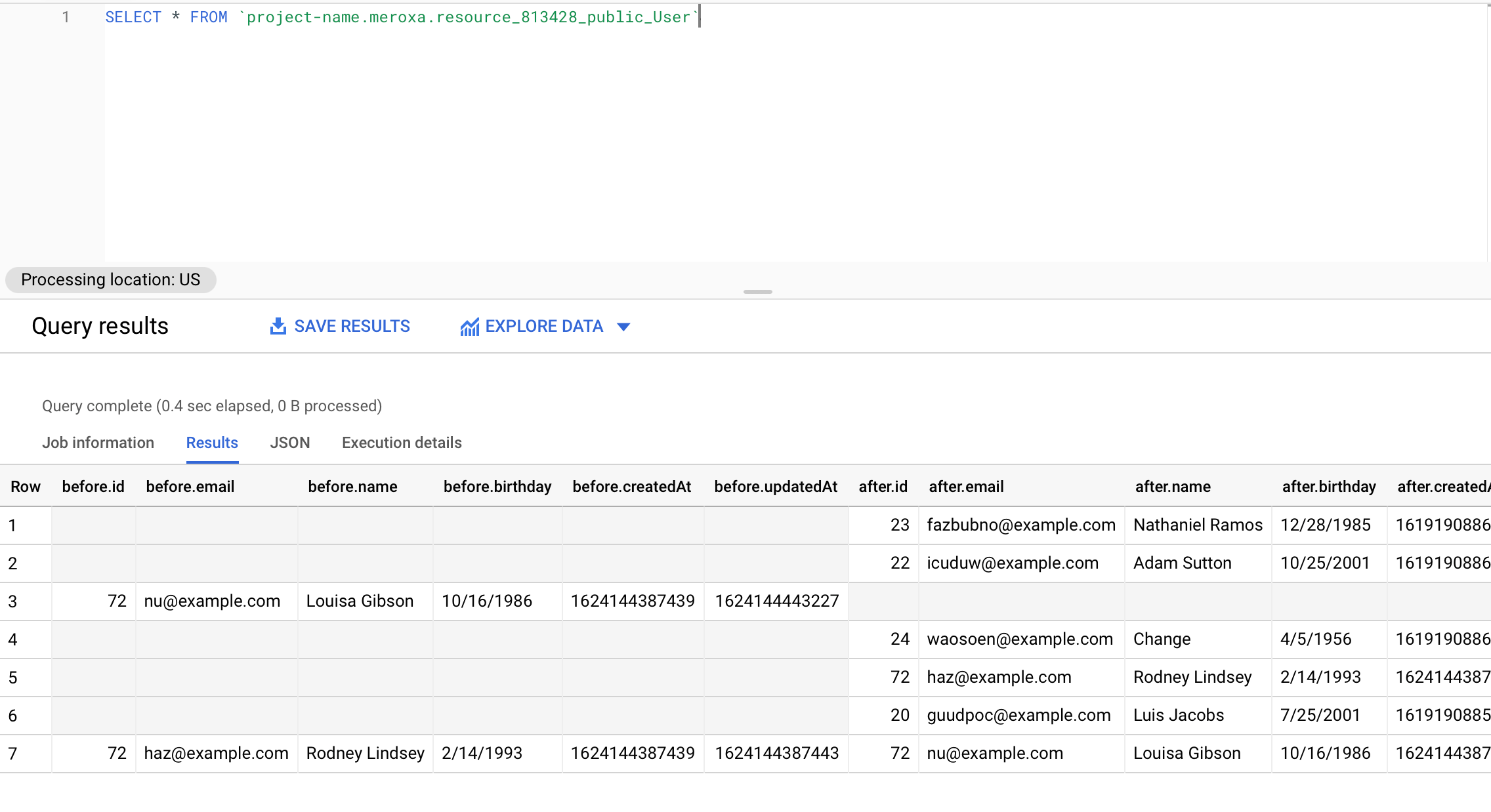

The goal of the pipeline is to replicate and produce a table of the changes in BigQuery:

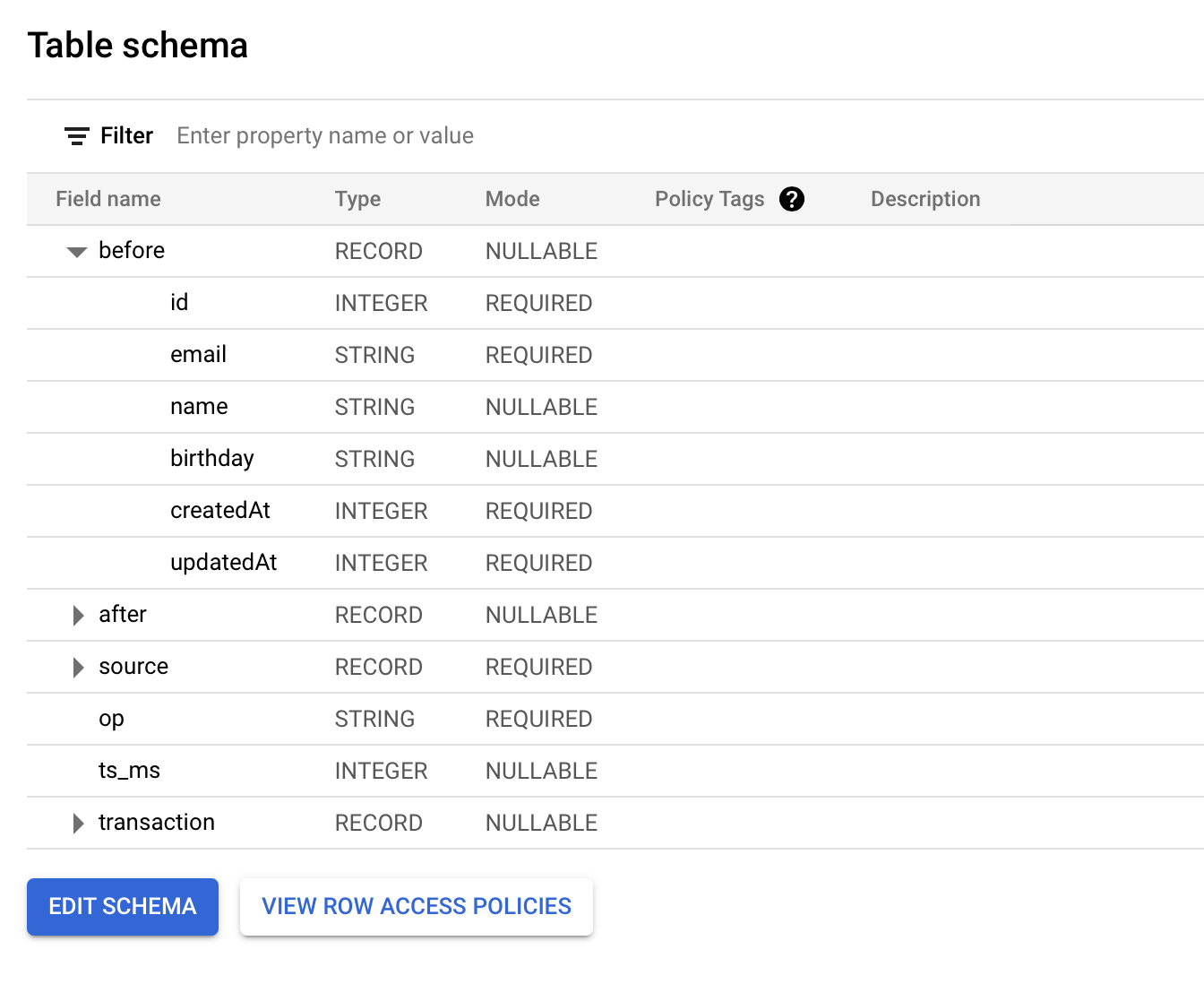

The schema of the created table will be defined as followed:

As you perform INSERT, UPDATE, and DELETE operations to PostgreSQL, the table within BigQuery will be kept in sync in real-time.

Let's build.

Prerequisites

Before you begin, you need:

- Download and install the Meroxa CLI.

- PostgreSQL Database (e.g., Amazon RDS) that can support logical replication.

- You must have a Google Cloud Platform (GCP) account that has billing enabled for BigQuery.

Adding a PostgreSQL Resource

To begin, we can add a PostgreSQL resource to the Meroxa Resource Catalog as the source of our data.

- Before you can add, the PostgreSQL instance needs to be accessible by Meroxa. You may:

- Connect directly and only allow Meroxa IPs.

- View environment-specific instructions (e.g., Amazon RDS).

- Use the

meroxa resource createcommand to create a new resource:

$ meroxa resource create my-postgres \

--type postgres \

-u postgres://$PG_USER:$PG_PASS@$PG_URL:$PG_PORT/$PG_DB \

--metadata '{"logical_replication":"true"}'

In the command above, replace the following variables with a valid PostgreSQL Access URL.

Now, your new PostgreSQL resource is available in your Resource Catalog. You can view your resources with meroxa resource ls or in the Dashboard.

Adding a BigQuery Resource

Next, we can create a BigQuery resource.

-

Before you can add, the BigQuery instance needs to be accessible by Meroxa. You'll need to have:

- GCP Project ID - A Google Cloud Project ID.

- BigQuery Dataset - A BigQuery dataset (see BigQuery Setup).

- Service Account JSON - A valid Service Account credentials with access to BigQuery (see BigQuery Setup).

-

Use the

meroxa resource createcommand to create a new resource:

$ meroxa resource create my-bigquery \

--type bigquery \

--url bigquery://$GCP_PROJECT_ID/$GCP_DATASET \

--client-key "{ $GCP_SERVICE_ACCOUNT_JSON }"

In the command above, replace the following variables with valid credentials from your BigQuery environment.

Now, BigQuery is available in your Resource Catalog.

Connecting Resources

Now that we have a source, Postgres, and a destination, BigQuery, in our catalog, we can connect them together.

meroxa pipelines create postgres-to-bigquery

- Add a source to the pipeline with

meroxa connector create:

meroxa connector create from-postgres-connector \

--from my-postgres \

--input $POSTGRES_TABLE_NAME # ex. public.Users \

--pipeline postgres-to-bigquery

- Capture the output stream name:

Streams are created when you create connectors. Source connectors produce output streams and destination connectors produce input streams.

To see the available streams for your connectors, you can use meroxa resources list:

$ meroxa resources list

ID NAME TYPE STREAMS STATE PIPELINE

===== ===================== ====================== ================================= ========= ==========

925 postgres-571-432074 debezium-pg-source output: running default

resource-571-811818.public.User

926 webhook-239-324695 http-destination input: running default

resource-571-811818.public.User

933 to-bg bigquery-destination input: running default

resource-571-811818.public.User

- Add a destination to the pipeline:

Now, we can use the meroxa resources create command to add a new destination connector:

meroxa connector create to-bigquery-connector \

--from my-biqquery \

--input $STREAM_NAME \

--pipeline postgres-to-bigquery

Once your connectors are "Running", Meroxa will load all of the current table data into BigQuery, then keep it up to date.

To view, we can go to BigQuery in the GCP Console and query our new deltable database:

What's Next?

You now have a running data pipeline! As you perform INSERT, UPDATE, and DELETE operations, BigQuery will be kept in sync in real-time.

A great next step is to browse other resources to extend your existing data app or create new ones.

As always:

- If you need help, just reach out to [email protected]

- Join our Discord community.

- Follow us on Twitter.