Google BigQuery

Google BigQuery is a managed Google Cloud data warehouse for storing and analyzing large datasets. You can use it as a downstream resource in your Turbine streaming apps by using the write function to select a table in a dataset.

Setup

Prerequisites

You must have a Google Cloud Platform (GCP) account that has billing enabled for BigQuery.

Create a new GCP Service Account so that Meroxa can authenticate securely.

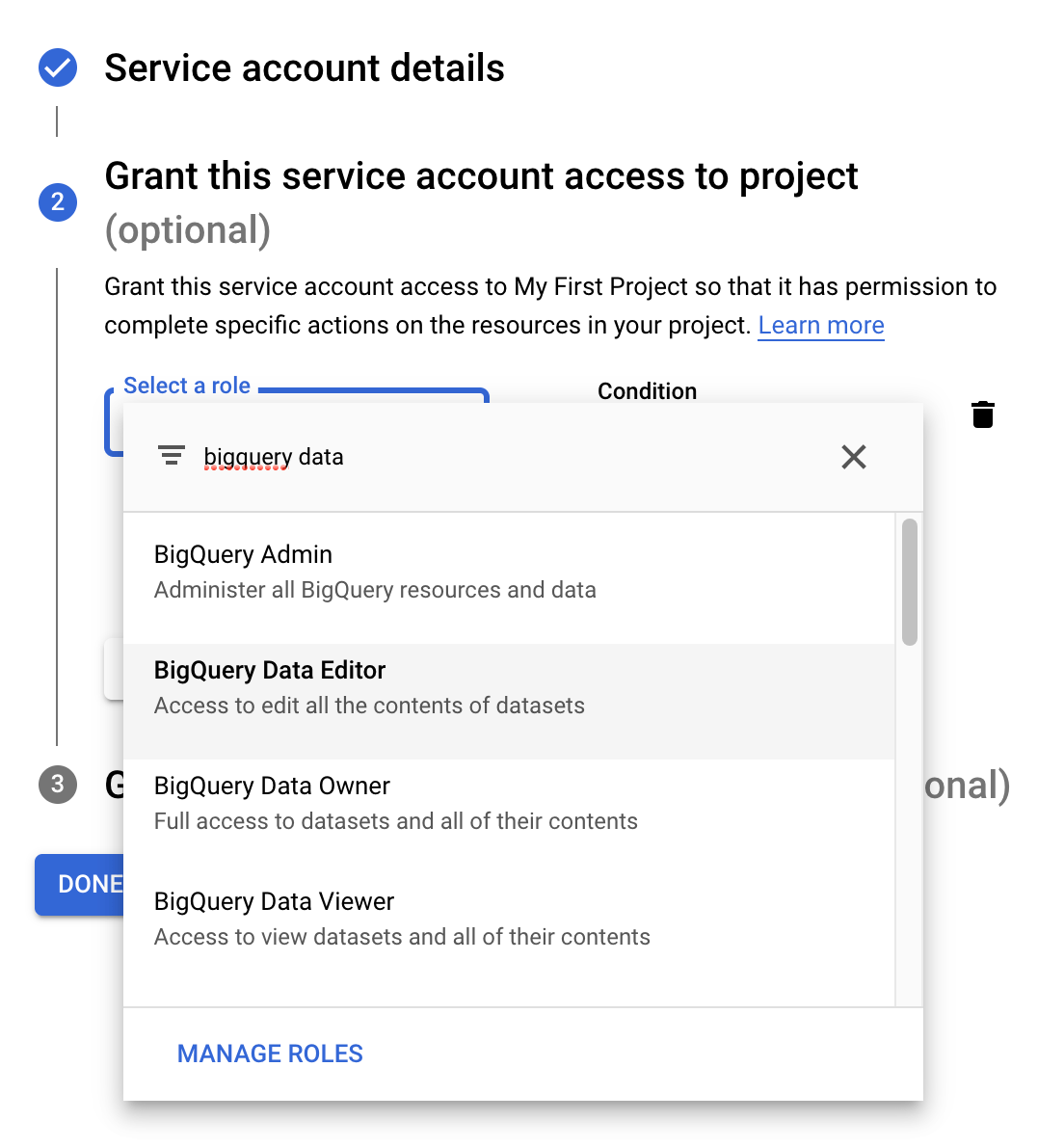

Grant the Service Account the BigQuery Data Editor and BigQuery Job User roles.

Create a Service Account Key and download the credentials JSON file. You will need this file when creating the resource.

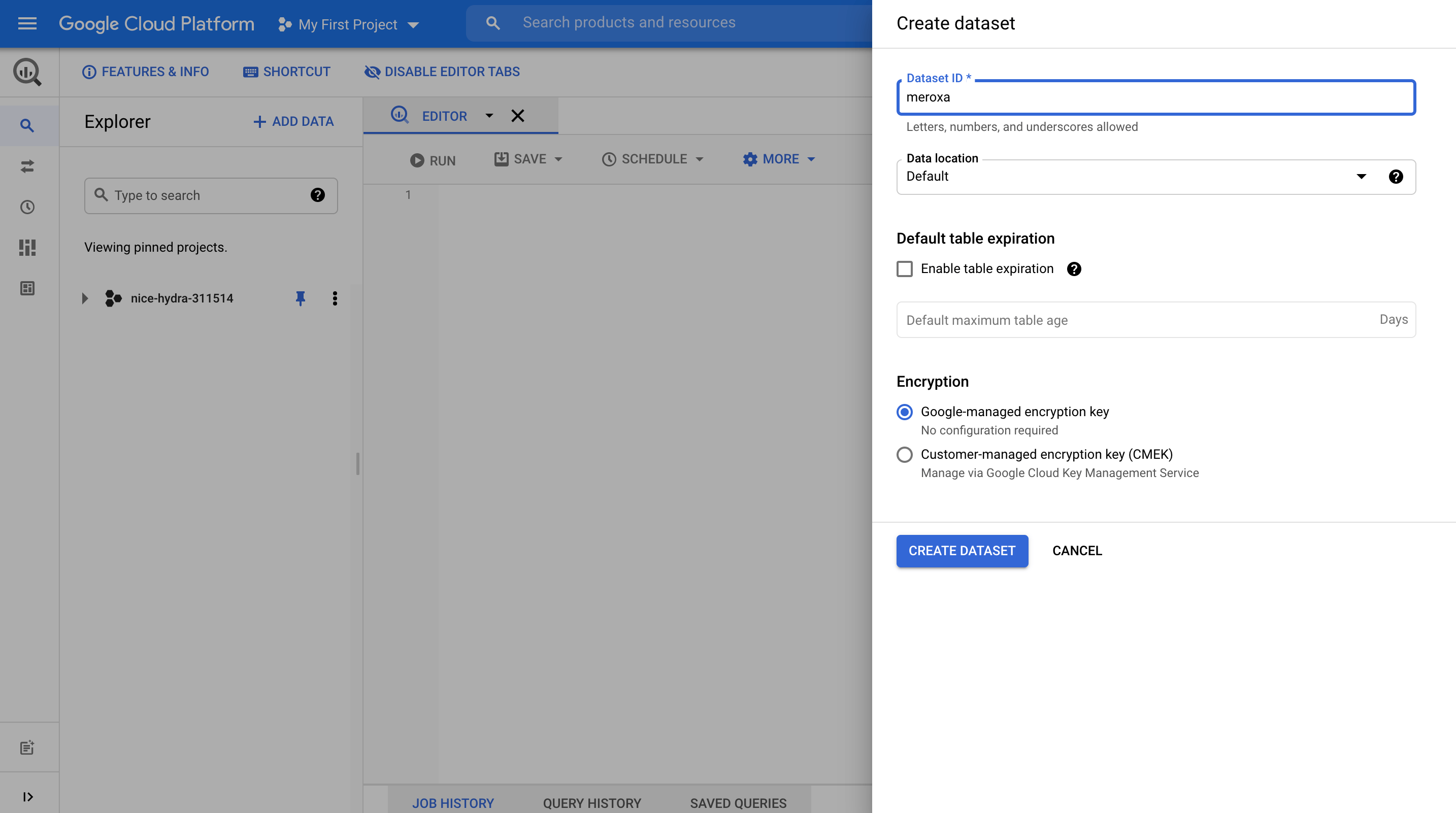

Create a BigQuery dataset to hold the destination data.

In the screenshot above, a dataset named meroxa is being created. You will need the dataset name when adding the resource.
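The steps above are shown in the GCP console, but they can also be sketched from the command line. The script below is a rough illustration only: it assumes the `gcloud` and `bq` command-line tools are installed and authenticated, and the project ID, service account name, dataset name, and key file name are all placeholder values that do not come from this guide. By default it only prints the commands it would run; set `DRY_RUN=0` to execute them.

```shell
#!/usr/bin/env bash
# Sketch only: every value below is a placeholder, not a name required by Meroxa.
set -euo pipefail

PROJECT_ID="${PROJECT_ID:-my-project}"
SA_NAME="${SA_NAME:-meroxa-bigquery}"
DATASET="${DATASET:-meroxa}"
KEY_FILE="${KEY_FILE:-meroxa-bigquery-key.json}"
SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"

# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

setup_bigquery_prereqs() {
  # 1. Create the Service Account.
  run gcloud iam service-accounts create "$SA_NAME" --project "$PROJECT_ID"

  # 2. Grant the BigQuery Data Editor and BigQuery Job User roles.
  for role in roles/bigquery.dataEditor roles/bigquery.jobUser; do
    run gcloud projects add-iam-policy-binding "$PROJECT_ID" \
      --member "serviceAccount:${SA_EMAIL}" --role "$role"
  done

  # 3. Create a key and download the credentials JSON file.
  run gcloud iam service-accounts keys create "$KEY_FILE" --iam-account "$SA_EMAIL"

  # 4. Create the dataset that will contain destination data.
  run bq mk --dataset "${PROJECT_ID}:${DATASET}"
}

setup_bigquery_prereqs
```

Reviewing the dry-run output before executing is a reasonable precaution, since the role bindings apply at the project level.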

Credentials

To add a BigQuery resource, you will need the following credentials:

- GCP Project ID - A Google Cloud Project ID.

- BigQuery Dataset - A BigQuery dataset (see BigQuery Setup).

- Service Account JSON - Valid Service Account credentials with access to BigQuery (see Prerequisites).
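Before creating the resource, it can help to confirm that the downloaded file really is a Service Account key. The helper below is a hypothetical sketch, not part of the Meroxa CLI: it only checks that the file is readable and contains the `"type": "service_account"` and `"private_key"` fields that Google's key files normally include. It does not verify that the key is active or has the right roles.

```shell
#!/usr/bin/env bash
# Hypothetical helper: returns 0 if the file looks like a Service Account key.
looks_like_service_account_key() {
  local file="$1"
  # The file must exist and be readable.
  [ -r "$file" ] || return 1
  # Look for the two fields expected in a Service Account key file.
  grep -Eq '"type"[[:space:]]*:[[:space:]]*"service_account"' "$file" &&
    grep -Eq '"private_key"[[:space:]]*:' "$file"
}
```

For example, `looks_like_service_account_key "$GCP_SERVICE_ACCOUNT_JSON_FILE" || echo "check your key file"` would flag a wrong or missing file before it is passed to the resource.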

Resource Configuration

Use the meroxa resource create command to configure your Google BigQuery resource.

The following example shows how to use this command to create a Google BigQuery resource named mybigquery with the minimum required configuration.

$ meroxa resource create mybigquery \
    --type bigquery \
    --url "bigquery://$GCP_PROJECT_ID/$GCP_DATASET_NAME" \
    --client-key "$(cat $GCP_SERVICE_ACCOUNT_JSON_FILE)"

In the example above, replace the following variables with valid credentials from your Google BigQuery environment:

- $GCP_PROJECT_ID - Google Cloud Project ID.

- $GCP_DATASET_NAME - Your BigQuery dataset name. The GCP console shows your dataset ID as "project_id.dataset_name". Include only the dataset name here (see BigQuery Setup).

- $GCP_SERVICE_ACCOUNT_JSON_FILE - Valid Service Account credentials with access to BigQuery (see Prerequisites).
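Because the GCP console shows the dataset ID with the project prefix, it is easy to paste the wrong value into the URL. The sketch below shows one way to strip that prefix and assemble the `bigquery://` URL used above. The function names `dataset_name` and `bigquery_url` are hypothetical helpers for illustration, and the project and dataset values are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; not part of the meroxa CLI.

# Strip a leading "project_id." or "project_id:" prefix from a dataset ID.
dataset_name() {
  local id="$1"
  id="${id##*.}"   # drop everything up to the last "."
  id="${id##*:}"   # drop everything up to the last ":"
  printf '%s\n' "$id"
}

# Build the resource URL from a project ID and a dataset ID or name.
bigquery_url() {
  printf 'bigquery://%s/%s\n' "$1" "$(dataset_name "$2")"
}

bigquery_url "my-project" "my-project.meroxa"
# prints: bigquery://my-project/meroxa
```

The result could then be passed as the `--url` value, for example `--url "$(bigquery_url "$GCP_PROJECT_ID" "$GCP_DATASET_NAME")"`.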